ONGOING RESEARCH / PROJECTS


Neural Voice Cloning with a Few Samples

Voice cloning is a highly desired feature for personalized speech interfaces. We introduce a neural voice cloning system that learns to synthesize a person's voice from only a few audio samples. We study two approaches: speaker adaptation and speaker encoding. Speaker adaptation is based on fine-tuning a multi-speaker generative model. Speaker encoding is based on training a separate model to directly infer a new speaker embedding, which is then applied to a multi-speaker generative model. Both approaches achieve good naturalness and good similarity to the original speaker, even with only a few cloning audio samples. While speaker adaptation achieves slightly better naturalness and similarity, the speaker encoding approach requires significantly less cloning time and memory, making it more favorable for low-resource deployment.
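The cost trade-off between the two approaches can be illustrated with a toy sketch. It is not the system described above. The frozen "multi-speaker generative model" is assumed to be a random linear map `G` from a speaker embedding to acoustic features. Adaptation updates only the embedding, which is one possible variant of fine-tuning. The hypothetical "trained encoder" `E` is replaced by the pseudo-inverse of `G`. All function names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy frozen "multi-speaker generative model" (assumption): a fixed linear map
# from a d-dimensional speaker embedding to F-dimensional acoustic features.
d, F = 4, 16
G = rng.normal(size=(F, d))


def adapt_embedding(G, samples, steps=2000):
    """Speaker adaptation, embedding-only variant: gradient descent on a new
    speaker embedding while the generator G stays frozen."""
    target = samples.mean(axis=0)
    lr = 1.0 / np.linalg.norm(G, 2) ** 2  # below 2 / largest eigenvalue of G^T G
    e = np.zeros(G.shape[1])
    for _ in range(steps):
        # Gradient of mean_i 0.5 * ||G e - y_i||^2, which equals G^T (G e - mean(y)).
        grad = G.T @ (G @ e - target)
        e -= lr * grad
    return e


def encode_embedding(E, samples):
    """Speaker encoding: a single forward pass of a separate encoder E."""
    return E @ samples.mean(axis=0)


# Three noisy "cloning samples" from an unseen speaker.
true_e = rng.normal(size=d)
samples = G @ true_e + 0.01 * rng.normal(size=(3, F))

e_adapt = adapt_embedding(G, samples)  # many iterative updates per speaker
E = np.linalg.pinv(G)                  # stand-in for a trained linear encoder
e_enc = encode_embedding(E, samples)   # one matrix-vector product
```

In this toy both routes solve the same least-squares problem, so they recover nearly the same embedding. The difference is cost: adaptation needs 2000 gradient updates for each new speaker, while encoding needs a single forward pass.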

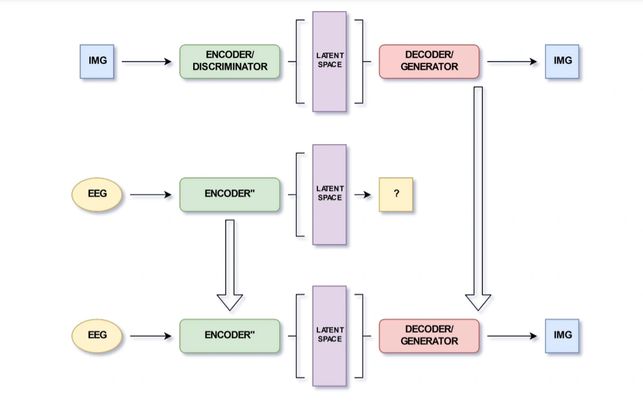

Visual Reconstruction of Images from Spoken Words Using EEG

In this project, the main motivation was to reconstruct images from a person's spoken words. We designed and trained a deep neural network to perform this task using a large dataset of EEG signals recorded from people's brains. To this end, we collected an EEG dataset of 2000 audio and visualization events, which can be used to analyze temporal visual and auditory responses to spoken-word stimuli. Finally, everything was implemented on the MNIST dataset.
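Temporal responses to stimulus events in EEG are commonly analyzed by cutting a fixed window around each event onset ("epoching"), baseline-correcting it, and averaging across events. The sketch below shows that standard step on synthetic data. It is an assumption, not this project's pipeline. The window bounds (`tmin`, `tmax`), the sampling rate, and the function name `epoch_events` are all hypothetical.

```python
import numpy as np


def epoch_events(eeg, onsets, sfreq, tmin=-0.1, tmax=0.5):
    """Cut a fixed window around each event onset.

    eeg: (n_channels, n_samples) continuous recording
    onsets: event onset positions, in samples
    sfreq: sampling rate in Hz; tmin/tmax: window bounds in seconds
    Returns (n_events, n_channels, n_times), baseline-corrected when tmin < 0.
    """
    start = int(round(tmin * sfreq))
    stop = int(round(tmax * sfreq))
    # Keep only events whose whole window lies inside the recording.
    epochs = [eeg[:, o + start:o + stop] for o in onsets
              if o + start >= 0 and o + stop <= eeg.shape[1]]
    if not epochs:
        raise ValueError("no event has a complete window inside the recording")
    epochs = np.stack(epochs)
    if start < 0:
        # Subtract each epoch's pre-stimulus mean, separately per channel.
        epochs = epochs - epochs[:, :, :-start].mean(axis=2, keepdims=True)
    return epochs


# Synthetic example: 2 channels, 10 s at 100 Hz, with a constant offset.
sfreq = 100
eeg = np.full((2, 1000), 5.0)
onsets = [100, 300, 500, 990]       # the last window runs past the end and is dropped
for o in onsets[:3]:
    eeg[:, o + 10:o + 20] += 1.0    # synthetic response 100-190 ms after onset

epochs = epoch_events(eeg, onsets, sfreq)
erp = epochs.mean(axis=0)           # average response across events
print(epochs.shape)                 # (3, 2, 60)
```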

Generative Modelling of Images from Speech (Speech2Face)

In this project, the main motivation was to infer what a person looks like from the way they speak. We designed and trained a deep neural network to perform this task using thousands of natural YouTube videos of people speaking. During training, our model learns voice-face correlations; we then used it for voice recognition to evaluate the performance of our model. Training is self-supervised: it exploits the natural co-occurrence of faces and speech in Internet videos, without the need to model attributes explicitly.
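One way to set up such a self-supervised objective is sketched below. It assumes, as a hypothetical design rather than necessarily this project's, that a frozen, pretrained face-recognition network supplies a feature vector for the face in each video. A voice encoder is then trained to predict that vector from the co-occurring speech, so the video itself provides the target and no labels are needed. The function names are illustrative. Top-1 voice-to-face retrieval is one assumed way to evaluate the learned correlations.

```python
import numpy as np


def l2_normalize(v, axis=-1, eps=1e-8):
    """Scale vectors to unit length along `axis`."""
    return v / (np.linalg.norm(v, axis=axis, keepdims=True) + eps)


def voice_to_face_loss(pred_face_feats, face_feats):
    """Mean L1 distance between normalized predicted and target face features.

    Row i of pred_face_feats comes from the speech in video i; row i of
    face_feats comes from the face in the same video (the self-supervised pair).
    """
    diff = l2_normalize(pred_face_feats) - l2_normalize(face_feats)
    return float(np.abs(diff).sum(axis=1).mean())


def top1_retrieval_accuracy(pred_face_feats, face_feats):
    """Fraction of voices whose most cosine-similar face is their own."""
    sims = l2_normalize(pred_face_feats) @ l2_normalize(face_feats).T
    return float((sims.argmax(axis=1) == np.arange(len(sims))).mean())


rng = np.random.default_rng(0)
face_feats = rng.normal(size=(8, 16))   # stand-in for pretrained face features

print(voice_to_face_loss(face_feats, face_feats))       # 0.0 for a perfect predictor
print(top1_retrieval_accuracy(face_feats, face_feats))  # 1.0
# Mismatched pairs (each voice paired with the previous video's face) retrieve nothing.
print(top1_retrieval_accuracy(np.roll(face_feats, 1, axis=0), face_feats))  # 0.0
```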

DOUBLY CONVOLUTIONAL NEURAL NETWORKS (DCNNs)

Building large models with parameter sharing accounts for most of the success of deep convolutional neural networks (CNNs). In this paper, we propose doubly convolutional neural networks (DCNNs), which significantly improve the performance of CNNs by further exploring this idea. Instead of allocating a set of independently learned convolutional filters, a DCNN maintains groups of filters in which the filters within each group are translated versions of each other. In practice, a DCNN can be easily implemented by a two-step convolution procedure, which is supported by most modern deep learning libraries. We perform extensive experiments on three image classification benchmarks (CIFAR-10, CIFAR-100, and ImageNet) and show that DCNNs consistently outperform other competing architectures. We have also verified that replacing a convolutional layer with a doubly convolutional layer at any depth of a CNN can improve its performance. Moreover, we explore various design choices of DCNNs, showing that DCNNs can serve the dual purpose of building more accurate models and/or reducing the memory footprint without sacrificing accuracy.
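The filter-sharing idea and the two-step procedure can be sketched in NumPy. The sketch makes several assumptions not stated in the text: a single input image, "valid" cross-correlation with stride 1, and filters of size `z` cropped at every offset from larger `z' x z'` meta filters. It computes the layer in two ways. The first is direct, materializing all translated filters. The second uses two steps: extract a `z x z` input patch, then correlate that patch with each meta filter. The sketch checks that both give the same result. Function names are hypothetical, and taking the max over all translations is one simple pooling choice among several.

```python
import numpy as np


def conv2d_valid(x, w):
    """'Valid' cross-correlation. x: (C, H, W); w: (F, C, z, z) -> (F, H-z+1, W-z+1)."""
    n_f, _, z, _ = w.shape
    H, W = x.shape[1:]
    out = np.zeros((n_f, H - z + 1, W - z + 1))
    for i in range(H - z + 1):
        for j in range(W - z + 1):
            patch = x[:, i:i + z, j:j + z]
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out


def subfilters(meta, z):
    """All z x z translated crops of each meta filter: (K*n*n, C, z, z), n = z' - z + 1."""
    K, _, zp, _ = meta.shape
    n = zp - z + 1
    return np.stack([meta[k, :, a:a + z, b:b + z]
                     for k in range(K) for a in range(n) for b in range(n)])


def double_conv_two_step(x, meta, z):
    """Same output as conv2d_valid(x, subfilters(meta, z)), computed in two steps."""
    K, _, zp, _ = meta.shape
    n = zp - z + 1
    H, W = x.shape[1:]
    out = np.zeros((K, n, n, H - z + 1, W - z + 1))
    for i in range(H - z + 1):
        for j in range(W - z + 1):
            patch = x[:, i:i + z, j:j + z]  # step 1: take a z x z input patch
            for k in range(K):
                # Step 2: correlate the patch with the larger meta filter,
                # which yields the responses of all n x n translated filters at once.
                out[k, :, :, i, j] = conv2d_valid(meta[k], patch[None])[0]
    return out.reshape(K * n * n, H - z + 1, W - z + 1)


def translation_max_pool(y, K):
    """Max over all translations belonging to each meta filter -> (K, H', W')."""
    return y.reshape(K, -1, *y.shape[1:]).max(axis=1)


rng = np.random.default_rng(0)
x = rng.normal(size=(2, 6, 6))        # 2 input channels, 6 x 6 image
meta = rng.normal(size=(3, 2, 4, 4))  # 3 meta filters of size 4 x 4
direct = conv2d_valid(x, subfilters(meta, 3))
two_step = double_conv_two_step(x, meta, 3)
print(direct.shape)                                # (12, 4, 4)
print(np.allclose(direct, two_step))               # True
print(translation_max_pool(two_step, 3).shape)     # (3, 4, 4)
print(meta.size, subfilters(meta, 3).size)         # 96 216
```

In this configuration the 3 meta filters hold 96 weights, while the 12 independent 3 x 3 filters they generate would need 216. This illustrates how sharing weights across translated filters can reduce the memory footprint.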

Copyright © 2020 VisionBrain - All Rights Reserved.
